1/31/13

tegan and sara

omg omg omg... I mean, when I get in the car, I often check if Spotify has any new albums, and it is always exciting when I see a new album out from a band I like.

eye-dia

When I woke up this morning, I thought: my eye feels so weird I bet I'll visibly see something. I looked in the mirror, and saw something like the dotted region above. Note that I'm opening both eyes wide.

This swelling seemed to reduce during the day, but now that I took a nap this evening, it's back.

I'd like a service that I can send pictures like this to that will tell me what's wrong, and if this is something to worry about. This is not my idea, and in fact, a friend of mine has in the past sent a picture of sorts to a doctor to diagnose, but they happened to know the doctor.

1/30/13

work notes

the saga continues...

Although these notes are a bit cryptic about what I'm actually doing, they are enough for a friend at work to read and give me feedback about — which turns out to be a pretty good asynchronous communication mechanism — and he gave me some pointers. For one, it turns out I should be limiting my search for duplicates to duplicates by the same author. I reran my process and now get 14694 duplicates, which accounts for about a third of all duplicates.
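A minimal sketch of restricting duplicate detection to the same author. It assumes each item has an author field (a hypothetical name; the real field isn't given here) and a description field, as used in the fiber code from 1/29. Keying on the author together with the description means identical text from two different authors is not counted as a duplicate.

```javascript
// Flag items whose description already appeared earlier from the SAME author.
// Assumes hypothetical fields `author` and `description` on each item.
function sameAuthorDuplicates(items) {
  const seen = new Set();
  const dups = [];
  for (const item of items) {
    // JSON.stringify of the pair gives an unambiguous composite key,
    // so ("ab", "c") and ("a", "bc") can never collide.
    const key = JSON.stringify([item.author, item.description]);
    if (seen.has(key)) {
      dups.push(item);
    } else {
      seen.add(key);
    }
  }
  return dups;
}

const items = [
  { author: "ann", description: "Fix the login page" },
  { author: "bob", description: "Fix the login page" }, // different author: not a duplicate
  { author: "ann", description: "Fix the login page" }, // same author: duplicate
];
console.log(sameAuthorDuplicates(items).length); // 1
```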

Now I need to rebuild my feature vector template with room for a "duplicate" feature. The feature vector template also has counts for various features, most of which are words, so that I can use them in some tfidf capacity if desired, though I think I'll begin with just binary features and see where I'm at, now that the training dataset is significantly larger.

Ok, I would have 288,893 features, but because I eliminate features that don't occur more than 100 times, I am left with 9032 features.

At this point, I have a feature vector template, which is just a list of features. Now I need to go back through all the data items and build a feature vector for each one. I think I need this two-pass process because I don't know until after the first pass is done which features I'm going to eliminate.
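Here is a sketch of that two-pass process, assuming each document has already been reduced to a list of feature names (the shapes and the minCount parameter are illustrative, not the actual code). Pass one counts how many documents contain each feature and keeps those that occur in more than minCount documents; pass two uses the resulting template to turn each document into sorted, 1-based binary feature indices, which is the indexing liblinear expects.

```javascript
// Pass 1: count how many documents have each feature, keep the frequent ones.
function buildTemplate(docs, minCount) {
  const counts = new Map();
  for (const features of docs) {
    for (const f of new Set(features)) { // count each feature once per document
      counts.set(f, (counts.get(f) || 0) + 1);
    }
  }
  // Keep features occurring in MORE than minCount documents.
  const template = [...counts.keys()].filter((f) => counts.get(f) > minCount).sort();
  // Map feature name -> 1-based column index.
  const featureToIndex = new Map(template.map((f, i) => [f, i + 1]));
  return { template, featureToIndex };
}

// Pass 2: turn one document into a sorted list of active (binary) feature indices.
function buildVector(features, featureToIndex) {
  const indices = new Set();
  for (const f of features) {
    if (featureToIndex.has(f)) indices.add(featureToIndex.get(f));
  }
  return [...indices].sort((a, b) => a - b);
}

const docs = [["a", "b"], ["a", "c"], ["a", "b", "d"]];
const { template, featureToIndex } = buildTemplate(docs, 1);
console.log(template);                                     // [ 'a', 'b' ]
console.log(buildVector(["d", "b", "a"], featureToIndex)); // [ 1, 2 ]
```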

Hm.. for some reason I have usually preferred global functions for things like map and filter, but as I use them more, I'm less convinced, because I often get things like this:

_.map(_.sortBy(_.filter(_.map(_.pairs(v), function (e) {
    return [featureToIndex[e[0]], e[1]]   // [name, value] -> [index, value]
}), function (e) {
    return e[0]                           // drop features with no index
}), function (e) {
    return e[0]                           // sort by index
}), function (e) {
    return e[0] + ":" + ((e[1] % 1 == 0) ? e[1] : e[1].toFixed(6))   // "index:value"
}).join(' ')

which requires too much inside-out reading for me. I forget why I wanted the global functions. It had to do with some danger in touching JavaScript's prototype functions... anyway, I think I'll try the method-chaining style and re-encounter whatever problem I faced before.
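For comparison, here is a sketch of the same pipeline using JavaScript's built-in array methods, which read top to bottom. No prototypes need to be modified for this, since map, filter, sort and join already exist on arrays (Object.entries is a newer addition, from ES2017). It assumes v maps feature names to values and featureToIndex maps names to numeric indices, as in the snippet above; one small difference is that it keeps only entries whose index is actually a number, rather than relying on truthiness as the original filter does.

```javascript
// Convert a {featureName: value} object into liblinear's "index:value ..." text,
// reading top to bottom instead of inside out.
function toSparseString(v, featureToIndex) {
  return Object.entries(v)
    .map(([name, value]) => [featureToIndex[name], value]) // name -> [index, value]
    .filter(([index]) => typeof index === "number")       // drop features not in the template
    .sort((a, b) => a[0] - b[0])                          // ascending indices
    .map(([index, value]) =>
      index + ":" + (value % 1 === 0 ? value : value.toFixed(6)))
    .join(" ");
}

const featureToIndex = { apple: 3, banana: 1 };
console.log(toSparseString({ apple: 1, banana: 0.25, rare: 1 }, featureToIndex));
// prints 1:0.250000 3:1
```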

But back to features... it's processing. About half-way there. Ok, done. 260mb. Not bad.

Ok, let's try running this thing: train -v 5 feature_vectors.txt... oh, forgot to install liblinear on the ec2 machine..

trying again.. ./liblinear/train -v 5 feature_vectors.txt... hm... "Wrong input format at line 1". Ah. I forgot to join with newlines. Now, when I examined the partial output to make sure everything was working before letting it sit for 5 minutes building the large vector, I only looked at a single vector, so I didn't see the missing newline. It impresses me sometimes how I trip over everything that can be tripped over, over and over... I've been doing this for years. Years. And still, I'm liable to make every mistake possible :)
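One way to catch the missing-newline mistake before waiting on a full run is to check every line of the generated output rather than eyeballing the first one. A minimal sketch, assuming liblinear's "label index:value ..." line format with ascending indices; the joinLines and checkLiblinear helpers and their regular expressions are illustrative, not part of liblinear.

```javascript
// Join one formatted line per example with "\n" (plus a trailing newline).
function joinLines(lines) {
  return lines.join("\n") + "\n";
}

// Returns the 1-based number of the first malformed line, or 0 if all look fine.
function checkLiblinear(text) {
  const lines = text.split("\n");
  if (lines[lines.length - 1] === "") lines.pop(); // ignore the trailing newline
  for (let i = 0; i < lines.length; i++) {
    const [label, ...pairs] = lines[i].trim().split(/\s+/);
    if (!/^[-+]?\d+(\.\d+)?$/.test(label)) return i + 1;
    let last = 0;
    for (const pair of pairs) {
      const m = /^(\d+):([-+]?\d*\.?\d+(e[-+]?\d+)?)$/i.exec(pair);
      if (!m || Number(m[1]) <= last) return i + 1; // bad pair or non-ascending index
      last = Number(m[1]);
    }
  }
  return 0;
}

const good = joinLines(["1 1:1 3:0.5", "-1 2:1"]);
const bad = ["1 1:1 3:0.5", "-1 2:1"].join(" "); // the forgotten-newline bug
console.log(checkLiblinear(good)); // 0
console.log(checkLiblinear(bad));  // 1
```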

Great, ok, it's running! Now the moment of truth.. will liblinear be able to process a 260mb training file on a medium ec2 machine... there are dots appearing... I think that means it's thinking... Hm... I have no idea how long it will take, and I may go to bed before it finishes. I think maybe I'll cancel it, run it in "screen", so I can log out if I need to and let it run... Ok, there it goes...

headache

This is a shot taken from my mind's eye. My mind's eye is sort of behind my eyes, which you can see as sort of circles in the center.

Do you see the headache?

1/29/13

work notes

still on this data processing / machine learning job...

I'm classifying a bunch of documents. I had been marking things as yes or no, but we actually have more information than just yes, like why. I thought maybe the classifier should try to say why as well. I was showing data about the counts of different whys to my friend at work, and he mentioned that the real purpose of the classifier is to identify nos that don't need to be processed further. Part of my brain was thinking that all along, yet somehow it didn't occur to me that the why is irrelevant for this purpose. So, good.

I need to identify duplicates. Or rather, I need to make sure the classifier doesn't mark duplicates as nos. This will probably need to be another feature, if not a first-pass-algorithm. Let's see how many duplicates there are. Hm.. 37,040 are exact duplicates, which is about 6%. What if we convert everything to lower-case, and remove punctuation.. 43,678, which is about 18% more. Not too many more, but worth doing I suppose.
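Counting both kinds of duplicates can be sketched as follows, assuming items with a description string. The normalization mirrors the one in the fiber code below: lowercase, then delete every run of non-word characters. Note that \W also matches spaces, so "fix it" and "fixit" end up with the same key.

```javascript
// Count items whose key has already been seen, for a given key function.
function countDuplicates(items, keyOf) {
  const seen = new Set();
  let dups = 0;
  for (const item of items) {
    const key = keyOf(item);
    if (seen.has(key)) dups++;
    else seen.add(key);
  }
  return dups;
}

const exact = (d) => d.description;
// Lowercase and strip non-word characters (note: \W also removes spaces).
const normalized = (d) => d.description.toLowerCase().replace(/\W+/g, "");

const data = [
  { description: "Broken link!" },
  { description: "Broken link!" },
  { description: "broken link" },
];
console.log(countDuplicates(data, exact));      // 1
console.log(countDuplicates(data, normalized)); // 2
```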

Programming note: the data is in about 15 json files. Each is an array. I could load all these arrays into memory and concatenate them, but that seems to take time, perhaps because it is shuffling around 1.7gb of memory. I think it is faster to load each file, process all its items, and then move to the next one. (ahh, the trains are back.. sigh.. the heater is also on, but the trains are louder.)
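The load-one-file-at-a-time approach might look like this in Node, assuming each file holds a single JSON array; the function name is illustrative. Only one file's array is reachable at a time, so each parsed array can become garbage before the next file is read.

```javascript
const fs = require("fs");

// Parse each JSON file in turn and hand its items to `onItem`,
// instead of concatenating every array into one big in-memory array.
function forEachItemInFiles(paths, onItem) {
  let count = 0;
  for (const path of paths) {
    const items = JSON.parse(fs.readFileSync(path, "utf8")); // one array per file
    for (const item of items) {
      onItem(item);
      count++;
    }
    // After this iteration nothing refers to `items`, so it can be collected.
  }
  return count;
}
```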

So what is a good programming abstraction for when I want to feed a bunch of items into a thing one at a time, and then get a final assessment of all the items back.. here we go:

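var Fiber = require('fibers')

// Counts duplicate descriptions; items are fed in one at a time via countDups.run(d).
// _.md5 and _.setAdd are not stock underscore; they are helper additions
// (assumed here: _.setAdd returns false when the key was already present).
var countDups = Fiber(function (d) {
    var descs = {}
    var ouch = 0
    while (d) {
        // normalize: lowercase and strip non-word characters, then hash
        var hash = _.md5(d.description.toLowerCase().replace(/\W+/g, ''))
        if (!_.setAdd(descs, hash)) {
            ouch++                // hash already seen: a duplicate
        }
        d = Fiber.yield()         // wait for the next item (null means done)
    }
    console.log("ouchies: " + ouch)
})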

...now I just call countDups.run(d) for each data item, and countDups.run(null) when I'm done. Node fibers are so awesome.
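For comparison, the same feed-items-then-get-a-result pattern can be written with a native JavaScript generator instead of node-fibers. This is a swapped-in technique and only a sketch. The first next() call just starts the generator and runs it to its first yield; after that, each next(item) becomes the value of the paused yield, and next(null) ends the loop and hands back the count.

```javascript
// Feed items in one at a time; finish by passing null, which returns the count.
// (The fiber version hashes the key with _.md5; here the normalized string is the key.)
function* countDupsGenerator() {
  const seen = new Set();
  let dups = 0;
  let d = yield; // wait for the first item
  while (d) {
    const key = d.description.toLowerCase().replace(/\W+/g, "");
    if (seen.has(key)) dups++;
    else seen.add(key);
    d = yield; // pause until the next item arrives
  }
  return dups; // delivered as the `value` of the final next(null) call
}

const counter = countDupsGenerator();
counter.next(); // prime: run up to the first `yield`
for (const d of [{ description: "Hi!" }, { description: "hi" }, { description: "bye" }]) {
  counter.next(d);
}
console.log(counter.next(null).value); // 1
```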

Ok, now I need to convert some scattered test code for extracting features and such into these well-contained utility functions... hm.. I had typed _.each(text.match(/[a-zA-Z]+/g), ...) and worried that this might hit the "length" issue in _.each (it treats any object with a numeric length property as an array) if the text happened to contain the word "length". But it doesn't, because match itself returns an array... still, the fact that I keep needing to think about this issue is annoying. So annoying that I may switch away from underscore :( ... ugg... and there it is: _.each(getWordBag(text), ...). A word bag is a hashmap mapping words to counts, and it might contain the word "length", in which case _.each would iterate over it as if it were an array. Do I really want to move away from underscore for this? Can I convince the underscore person that this is an issue? Ok, I just submitted an issue. We'll see what comes of it. For now, I'll work around this, I guess... Hm... I guess underscore says "no".
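One way to sidestep the "length" problem entirely is to keep the word bag in a Map rather than a plain object: a Map key named "length" is just another entry, and iterating a Map never treats it as array-like. A sketch, with a hypothetical wordBag helper standing in for getWordBag and the same /[a-zA-Z]+/g tokenizer:

```javascript
// Build a word -> count bag as a Map, so a word like "length" is just a key.
function wordBag(text) {
  const bag = new Map();
  for (const word of text.match(/[a-zA-Z]+/g) || []) { // match() returns null when nothing matches
    bag.set(word, (bag.get(word) || 0) + 1);
  }
  return bag;
}

const bag = wordBag("the length of the rope");
for (const [word, count] of bag) {
  console.log(word, count); // the 2, then length 1, of 1, rope 1
}
```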

do'in it wrong

I submitted an issue to underscore. The response: "Please search for duplicates. Practically every second issue is a duplicate of this one. And it has been turned down every time."

My emotional response to this is: "Alas, I thought I was helping, but I messed up. Now people think I'm stupid and annoying."

I suppose my response should be: "Ok, thanks for the tip, next time I'll search through all previous issues to make sure my issue hasn't already been submitted."

But instead I feel humiliated, and want to somehow tell the guy: "Hey, I'm not stupid!" So I respond with a somewhat emotionally charged thing saying effectively: "If there are lots of duplicates, doesn't that mean it's worth doing something about?"

But then after writing this post, I delete my response, and close the issue, reluctant to post any more issues.

no sound

This shot was taken from the window at the foot of my bed.

Note the trains.

Usually, at least one of these trains is idling its engine in preparation for departure. But today, at 3am, as I was sleeping, the heater in our apartment turned off, and there was no sound at all.

I woke up with a start, and I literally had the thought: am I dead?

1/28/13

multiplying beta distributions

Beta distributions seem like a cool way to keep track of how biased you think coins are. And if you want to flip multiple coins simultaneously, it seems fine to calculate the probability of getting all heads by multiplying together the means of the beta distributions for each coin.
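Since the coins' biases are independent, the expected value of their product equals the product of their expected values, so multiplying the means gives exactly the probability of all heads. The mean of Beta(a, b) is a / (a + b). A short sketch, representing each coin as an [a, b] pair (an assumed representation):

```javascript
// Mean of a Beta(a, b) distribution.
function betaMean([a, b]) {
  return a / (a + b);
}

// P(all heads) for independent coins, each described by a Beta(a, b) belief.
function probAllHeads(coins) {
  return coins.reduce((p, coin) => p * betaMean(coin), 1);
}

console.log(probAllHeads([[1, 1], [3, 1]])); // 0.375  (0.5 * 0.75)
```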

Of course, that will just give you a number: the probability of getting all heads. But we actually know more than that. Because each coin is a beta distribution, there is some distribution over how likely it is that all the coins will land on heads.

Intuitively, it seems like we should be able to get this distribution by multiplying the beta distributions for each coin together somehow. But how?

As far as I can tell, it is "hard". There's no nice formula for it. But these people came up with a nice approximation, which they use in their "subjective logic" system.

I implemented a test of this in javascript, and you can see above a demo of multiplying together three beta distributions. Note that it is showing an inverse cdf of the result. This is not because I like inverse cdfs more than pdfs (though I do), but rather because the calculation was easier that way (which is part of why I like inverse cdfs more than pdfs).

The red curve is the "real" result. This is approximated numerically using lots of integration. The blue curve is the approximation using subjective logic. Note that they are not the same. This difference appears to be real, as opposed to an insufficient numerical approximation. In fact, as far as I can gather, the real result is not really a beta distribution at all, though it is very close to one.
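The subjective-logic approximation itself isn't reproduced here. For comparison, here is a different, swapped-in approximation based on matching moments. Because the coins are independent, the mean and the second moment E[X^2] of the product are just the products of the individual means and second moments (for Beta(a, b), the mean is a/(a+b) and E[X^2] = a(a+1)/((a+b)(a+b+1))), and a single beta distribution can then be fit to that mean and variance. Like the blue curve, this is only an approximation, since the true product is generally not a beta distribution.

```javascript
// Approximate the product of independent Beta(a, b) variables by a single
// beta with the same mean and variance (method of moments).
function betaProductMoments(betas) {
  let mean = 1;
  let secondMoment = 1; // E[X^2]
  for (const [a, b] of betas) {
    mean *= a / (a + b);
    secondMoment *= (a * (a + 1)) / ((a + b) * (a + b + 1));
  }
  const variance = secondMoment - mean * mean;
  // For a beta: variance = mean * (1 - mean) / (A + B + 1); solve for A + B.
  const total = (mean * (1 - mean)) / variance - 1;
  return [mean * total, (1 - mean) * total]; // [A, B]
}

console.log(betaProductMoments([[2, 3]])); // a single input comes back as roughly [2, 3]
console.log(betaProductMoments([[2, 3], [4, 4], [5, 2]]));
```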

pdf, cdf, inverse cdf

The average height of a person is 5'4". Here's a pdf:

If you throw a dart at the pdf, and it manages to land under the curve, then it is most likely to land at a common height.

Here's a cdf:

The curve shows what percent of people are shorter than a given height.

Now, if you literally put all humans in a line-up sorted by height, you'd get this:

Which is an inverse cdf.

I like this curve, because it is easy to create. If you have a bunch of human heights, you just sort them, and plot that. It is also meaningful. It shows what it would look like if you lined up a bunch of humans. And a wide/flat part of the curve means that there are a lot of humans with that height.
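Because the inverse cdf is just the sorted data, building one from samples takes only a few lines. A sketch, assuming heights in inches; p is the fraction of the line-up to the left, and picking the nearest sample (rather than interpolating) is one of several reasonable conventions. The empirical cdf is included for comparison.

```javascript
// Empirical inverse cdf: sort the samples, then look up the value at fraction p.
function inverseCdf(samples) {
  const sorted = [...samples].sort((a, b) => a - b); // the line-up sorted by height
  return (p) => sorted[Math.min(sorted.length - 1, Math.floor(p * sorted.length))];
}

// Empirical cdf: fraction of samples strictly below x.
function cdf(samples) {
  return (x) => samples.filter((s) => s < x).length / samples.length;
}

const heightsInches = [70, 62, 66, 64, 68];
const q = inverseCdf(heightsInches);
console.log(q(0), q(0.5), q(1));     // 62 66 70
console.log(cdf(heightsInches)(66)); // 0.4
```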

risc

I like the idea of a risc processor: fewer instructions, simpler.

I remember looking at some new processor, I forget which one, but it was more expensive and slower. That is, it had a slower clock rate. Apparently it got more done on each clock cycle in a non-risc way, and seemed to be faster overall.

I don't think my brain is a risc processor. I feel like my brain has an incredibly slow clock cycle.

Of course, I suppose all brains are non-risc processors.

But I feel like mine is slower than most. People definitely notice that I talk more slowly. If I'm talking quickly, it's because I've already thought something through ahead of time.

My mind is also like a lumbering giant, lumbering in some direction, and it is very hard to redirect its lumber. I think this is why I am more efficient when I focus on one thing.

focus

I feel that I am much more efficient when I'm focussing on one thing. I'm going to try doing that more. Of course, I don't want everything to fall to pieces, so perhaps I'll surround my main line of focus with little blips of side stuff.

Right now I'm focussing on a machine learning task for work, but I'm writing this blog post on the side. I'll go back and do more machine learning work, and when I need a break, I'll do some other small non-main-line task.

data processing notes

notes..

I've been gathering data from a database using node-postgres. One thing that sucks about javascript is multiline strings. I have a large SQL query that would be much more readable and manageable over multiple lines. Python and Perl have good support for this. I think javascript would benefit. As a side note, I always wonder why regular double-quoted strings can't go over multiple lines.
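Two ways to get a readable multiline SQL string in JavaScript: joining an array of lines, which works in any version, and template literals (backtick strings), which were added to the language in ES2015 and can span lines directly. The table and column names are made up for illustration.

```javascript
// Works in any JavaScript engine: one array element per line.
const queryJoined = [
  "SELECT id, description",
  "FROM items",
  "WHERE created_at > $1",
].join("\n");

// ES2015+ template literal: the newlines are part of the string.
const queryTemplate = `SELECT id, description
FROM items
WHERE created_at > $1`;

console.log(queryJoined === queryTemplate); // true
```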

The resulting data is about 1gb of json. I've been doing preliminary processing on my own machine, but now I'm trying to put everything on an ec2 machine.

Usually I feel fine doing development in Dropbox, but when dealing with large datasets, I feel a bit worried. I'm not sure why. I have 100gb of space. It shouldn't be a problem.

Macfusion has been great: it makes the ec2 machine look like a hard drive on my mac.

The json data is 814mb on disk, but expands to about 1.76gb in memory. Not sure how much of that is garbage that hasn't been collected yet, since I'm doing some array concatenation to build the final data array from multiple files. It also takes 4.5 minutes to load. I feel like that should be reducible.

This ec2 machine won't need to do much most of the time. I could use a small. But every day, I want to load and process this dataset, which will require at least a medium. I wonder how easy people have made it to create a large ec2 machine, send a bunch of data there, process it, and send the results back.

I discovered another field I needed, so I had to re-download everything and re-copy it. The ec2 machine itself doesn't have access to the database. I'm not sure what the right way to fix that is. Giving the ec2 machine database access is not trivial, and may involve some bureaucracy.

I need a word stemmer in node. I type "node stemmer" into google. I get "porter-stemmer", a stemmer package for node. Excellent. Yes, I know this happens with many languages, and is not new — there evolves an ecosystem of packages for anything you want to do — but it is still cool.

What is the longest word? I want to remove stuff people type like "asdflisjflsekfjlaskdfjilewghlegjlsijislehg" that isn't a word, and isn't likely to be typed by other people. Wikipedia says Floccinaucinihilipilification is the "Longest unchallenged nontechnical word". Great. Anything over "Floccinaucinihilipilification".length is rejected. Or perhaps I can just include everything at first, and remove things that don't appear often enough.
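The length cutoff could be applied right at tokenization time. A sketch using the same /[a-zA-Z]+/g tokenizer that shows up in the 1/29 notes; the tokenize name is illustrative.

```javascript
// 29 letters: the "longest unchallenged nontechnical word" per Wikipedia.
const MAX_WORD_LENGTH = "Floccinaucinihilipilification".length;

// Split text into alphabetic tokens and drop anything longer than the cutoff.
function tokenize(text) {
  return (text.match(/[a-zA-Z]+/g) || []).filter((w) => w.length <= MAX_WORD_LENGTH);
}

console.log(tokenize("ok asdflisjflsekfjlaskdfjilewghlegjlsijislehg fine"));
// [ 'ok', 'fine' ]
```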

I was about to worry about the issue with underscore's _.each treating hashmaps with a "length" as arrays, since I'm going to be throwing random words as keys into hashmaps, and one of those words might be "length", but then I realize I'm adding a prefix to all my word-keys, so I guess that's not an issue. For now.

Hm.. I just made my first pull request. I didn't even need to clone the project. I just saw an error in the readme file, I opened the readme file online, edited the file, and "proposed the change". Go github. And of course I actually modified it wrong, so I closed that pull request and submitted another one.

I'm building a large feature vector. I count how many examples use each feature, and filter away features that are possessed by fewer than 10 items. That reduces the features from 288891 to 30753.

I feel like it might be worth plotting a little graph to show how many features I would get for different cutoff choices, and choosing something at the "elbow" of the curve. Hm. How much effort would that take?
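Computing the numbers behind that plot is cheap once the per-feature counts exist: sort the counts once, and for each candidate cutoff find how many features exceed it. A sketch, assuming counts is an array with one count per feature; the sample counts and cutoffs are arbitrary.

```javascript
// For each cutoff, how many features have a count strictly above it.
function featuresKeptByCutoff(counts, cutoffs) {
  const sorted = [...counts].sort((a, b) => a - b);
  return cutoffs.map((cutoff) => {
    // Binary search for the first count > cutoff.
    let lo = 0;
    let hi = sorted.length;
    while (lo < hi) {
      const mid = (lo + hi) >> 1;
      if (sorted[mid] > cutoff) hi = mid;
      else lo = mid + 1;
    }
    return [cutoff, sorted.length - lo];
  });
}

const counts = [1, 1, 1, 2, 3, 50, 200, 1000];
const curve = featuresKeptByCutoff(counts, [0, 1, 10, 100]);
// curve is [[0, 8], [1, 5], [10, 3], [100, 2]]
```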

Oops. I was looking at how many times different words were used, and it's good I did, because I had meant to be counting how many documents include each word, but instead I'm counting how many times the word appears. I noticed this because the word "a" appears over a million times, but I don't have over a million documents.
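The difference between the two counts is whether a word is counted once per document or once per occurrence. A minimal sketch of both, assuming each document is already an array of words:

```javascript
// Term frequency: total number of occurrences across all documents.
function termCounts(docs) {
  const counts = new Map();
  for (const words of docs) {
    for (const w of words) counts.set(w, (counts.get(w) || 0) + 1);
  }
  return counts;
}

// Document frequency: number of documents containing the word at least once.
function documentCounts(docs) {
  const counts = new Map();
  for (const words of docs) {
    for (const w of new Set(words)) counts.set(w, (counts.get(w) || 0) + 1);
  }
  return counts;
}

const docs = [["a", "cat", "a", "hat"], ["a", "dog"]];
console.log(termCounts(docs).get("a"));     // 3
console.log(documentCounts(docs).get("a")); // 2, never more than docs.length
```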

Hm.. I usually don't have test cases. I find them cumbersome. But I've been using test cases for myutil2.js, in a file called myutil2_tests.html, and it is pretty convenient. In this case, I feel like it isn't actually more work, since I need to test the functions I add anyway, so I just put those tests into that file.

Another reason that the test cases work out is because I'm having everything point to a single utility library. If I was copying the utility library around, then I feel like it would be easier to just test stuff in the code that was trying to use it, as I usually do. But in this case, to do that, I would need to make a change, check it in, test it where I'm using it, and then check in fixes.. meaning that the checked in code would be broken sometimes, which seems dangerous.

Incidentally, I use myutil2.js from node projects as well — like the data processing I've been writing — and I load it dynamically like this: eval(_.wget('https://raw.github.com/dglittle/myutil/master/myutil2.js')). Of course, this relies on _.wget, which is not part of underscore, but is rather something I add to it in a nodeutil.js, which is not in a centralized place on github. I'm not sure what a good way to do that would be.. perhaps I could have most of the nodeutil.js stuff on github, except for the wget function itself, but much of what nodeutil.js does is the wget function.

Ok.. I plotted the curve showing how many features we would keep with each threshold value. It is a very very sharp elbow. So sharp that it's not worth plotting the curve because it just looks like a line going down, and then a line going to the side. After some poking around with it — zooming in — I'm going to go with 100 as the cutoff, which will leave about 7000 features.

1/25/13

begging

Boston

I lived in Boston for seven years, and never once gave money to someone begging for money. [update: I read this line and think people may think I say it with pride. I am not proud of it. I am not unproud of it. It is just what happened.] I had developed incredibly thick skin against begging. A homeless person could meet me face to face on the street, and my gaze would look through them, as if they weren't there. Of course, the real reason for that was to avoid getting trapped in a conversation with a crazy person, which is hard to exit once entered without simply walking away mid-sentence.

There was also a guy who delivered a heart-wrenching oratory to everyone at a subway stop: he was trying to break out of being a deadbeat, and he had an interview set up at Macy's in a couple hours, but he needed money for a haircut. Just $10. I was tempted to pitch in. The guy really seemed genuine. But I didn't. Incidentally, I later saw that same guy give a similarly well-delivered oratory, so I'm less convinced his story was true.

San Francisco

After three months living in San Francisco, I have given away two ten-dollar bills and a blanket.

The first incident happened as I was throwing out my trash one night. The event was more personal somehow. It was at my home. Nobody else was around. I told the guy I didn't have my wallet on me at the moment, which, it occurred to me, was not a very good excuse given that I could easily retrieve it. Then the guy asked if I had a blanket. It occurred to me that I had thrown a blanket into the trash recently, so I felt bad denying him one.

I told him I would get him a blanket. I did. I also got him $10. And when I handed over the goods, I asked him "what do you do?" This seemed like an awkward question, since it didn't seem like he "did" anything. But he said he sold pot, but the police had confiscated his stash recently, so he didn't have any income at the moment. He was trying to get a court order to get his stash back, but it was taking time. He showed me his license for selling pot. I asked him about his opinion on the legalization of pot — since it affected his career. He said he voted for legalization, but that if it was legalized, he would probably need to find another job.

Now just today, I was dropping someone off in San Francisco near a donut shop, and a guy approached me on the driver's side asking for two dollars for a donut. I was somewhat relieved that he wasn't asking for my car, and given that I didn't have two one-dollar bills, I gave him a ten.

Thoughts

I haven't decided what I think about begging.

Q: Are you worried that people will just buy drugs with your money?

A: No. I don't care if they buy drugs. I don't care if their stories are genuine. They obviously want money for something. I'm not going to judge whether that something is good. I assume they aren't trying to kill people with my money.

Q: Are you worried that begging favors people who ask for money over people who need money but don't ask for it?

A: I do worry about that. I think that I would be a sheepish beggar, reluctant to bother people, so by giving money to aggressive beggars, I'm selecting against my genetic kin. But if that was really my objection, then I suppose I would find myself donating money to bashful-beggar-causes. But I don't.

Q: Are you worried that beggars are crazy people?

A: Well, I think many of them are crazy, but that doesn't seem like a good reason not to give them money. If they are asking for money, they probably know how to use it.

Q: Do you think beggars are already supported by homeless shelters and such, and so they shouldn't be asking for money?

A: I assume they generally do use homeless shelters and such. I don't think it's wrong of them to also ask for money. I'm less concerned about whether they are morally justified in asking for money, and more concerned about whether I will give them any.

Q: Are you worried that you're making an insignificant dent in a much larger problem?

A: Yes. I do think giving money to a beggar is an insignificant dent in a much larger problem. And to the extent to which my normal activity is working toward a better future, the time I spend reaching into my wallet to get money for a beggar is taking time away from that pursuit. But, it isn't that much time. And I probably wouldn't have made that much progress anyway, because I would be walking around somewhere where beggars are located, rather than "working".

Q: Are you worried that you'll miss the money you give to a beggar?

A: No. Not to one beggar. Though, if I gave money to every person who asked, while walking in a big city, I suspect it might actually be a problem. I haven't done the calculation. In fact, that is one argument I've thought about against giving money to a beggar, which is, since I can't give to all of them, why this one. But I'm not sure that is actually a good argument against giving anything to anyone.

Q: Are you just annoyed by the physical interruption in your activity to give to a beggar?

A: Yes. But giving to charities is easier, and I don't do that either.. I haven't decided what I think about charities..

hm.. I think it's a difficult problem, I don't know what to do, I haven't had time to think about it — it hasn't been a priority — and so I end up doing whatever random thing my body naturally does, which is apparently ignoring crazy people on the street, but giving to people in more personal situations that take me by surprise..

I lived in Boston for seven years, and never once gave money to someone begging for money. [update: I read this line and think people may think I say it with pride. I am not proud of it. I am not unproud of it. It is just what happened.] I had developed incredibly thick skin against begging. A homeless person could meet me face to face on the street, and my gaze would look through them, as if they weren't there. Of course, the real reason for that was to avoid getting trapped in a conversation with a crazy person, which is hard to exit once entered without simply walking away mid-sentence.

There was also a guy who delivered a heart-wrenching oratory to everyone at a subway stop: he was trying to break out of being a deadbeat, and he had an interview set up at Macy's in a couple hours, but he needed money for a haircut. Just $10. I was tempted to pitch in. The guy really seemed genuine. But I didn't. Incidentally, I later saw that same guy give a similarly well-delivered oratory, so I'm less convinced his story was true.

San Francisco

After three months living in San Francisco, I have given away two ten-dollar bills and a blanket.

The first incident happened as I was throwing out my trash one night. The event was more personal somehow. It was at my home. Nobody else was around. I told the guy I didn't have my wallet on me at the moment, which, it occurred to me, was not a very good excuse, given that I could easily retrieve it. Then the guy asked if I had a blanket. It occurred to me that I had recently thrown a blanket into the trash, so I felt bad denying him one.

I told him I would get him a blanket. I did. I also got him $10. And when I handed over the goods, I asked him, "What do you do?" This seemed like an awkward question, since it didn't seem like he "did" anything. But he said he sold pot, and the police had recently confiscated his stash, so he didn't have any income at the moment. He was trying to get a court order to get his stash back, but it was taking time. He showed me his license for selling pot. I asked his opinion on the legalization of pot, since it affected his career. He said he voted for legalization, but that if it were legalized, he would probably need to find another job.

Now just today, I was dropping someone off in San Francisco near a donut shop, and a guy approached me on the driver's side asking for two dollars for a donut. I was somewhat relieved that he wasn't asking for my car, and given that I didn't have two one-dollar bills, I gave him a ten.

Thoughts

I haven't decided what I think about begging.

Q: Are you worried that people will just buy drugs with your money?

A: No. I don't care if they buy drugs. I don't care if their stories are genuine. They obviously want money for something. I'm not going to judge whether that something is good. I assume they aren't trying to kill people with my money.

Q: Are you worried that begging favors people who ask for money over people who need money but don't ask for it?

A: I do worry about that. I think that I would be a sheepish beggar, reluctant to bother people, so by giving money to aggressive beggars, I'm selecting against my genetic kin. But if that was really my objection, then I suppose I would find myself donating money to bashful-beggar-causes. But I don't.

Q: Are you worried that beggars are crazy people?

A: Well, I think many of them are crazy, but that doesn't seem like a good reason not to give them money. If they are asking for money, they probably know how to use it.

Q: Do you think beggars are already supported by homeless shelters and such, and so they shouldn't be asking for money?

A: I assume they generally do use homeless shelters and such. I don't think it's wrong of them to also ask for money. I'm less concerned about whether they are morally justified in asking for money, and more concerned about whether I will give them any.

Q: Are you worried that you're making an insignificant dent in a much larger problem?

A: Yes. I do think giving money to a beggar is an insignificant dent in a much larger problem. And to the extent to which my normal activity is working toward a better future, the time I spend reaching into my wallet to get money for a beggar is taking time away from that pursuit. But, it isn't that much time. And I probably wouldn't have made that much progress anyway, because I would be walking around somewhere where beggars are located, rather than "working".

Q: Are you worried that you'll miss the money you give to a beggar?

A: No. Not to one beggar. Though, if I gave money to every person who asked while walking in a big city, I suspect it might actually be a problem. I haven't done the calculation. In fact, that is one argument I've thought about against giving money to a beggar: since I can't give to all of them, why this one? But I'm not sure that is actually a good argument against giving anything to anyone.

Q: Are you just annoyed by the physical interruption in your activity to give to a beggar?

A: Yes. But giving to charities is easier, and I don't do that either.. I haven't decided what I think about charities..

hm.. I think it's a difficult problem, I don't know what to do, I haven't had time to think about it — it hasn't been a priority — and so I end up doing whatever random thing my body naturally does, which is apparently ignoring crazy people on the street, but giving to people in more personal situations that take me by surprise..

1/24/13

learning machine learning

I'm doing some machine learning at work, but I haven't done machine learning in a while. So I'm learning. Here are some notes:

Q: I'll be classifying documents. How do I turn the words into "features"?

A: The most common approach seems to be having a separate feature for each word. For example, there would be a feature for the word "machine" and a different feature for the word "learning".

Q: This blog post has the word "machine", so what number would I put for the "machine" feature?

A: You could put "7", because "machine" occurs 7 times. Or you could put "1", because "machine" occurs at least once. You could also use tf-idf.

Q: What do you recommend?

A: Well, at the end of this paper is a section called "A Practical Guide to LIBLINEAR". After reading it, I'm leaning toward binary features, where "1" means the word is there and "0" means it is not, and then normalizing these values: if a document has 10 distinct words, then we'd put "1/sqrt(10)" for each word instead of "1", so that the document's feature vector has length one.
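To make the three options concrete, here is a minimal JavaScript sketch for a single document. It assumes the document has already been split into word tokens (the tokenizer is not shown), and the helper name `featureValues` is hypothetical. It returns raw counts, binary values, and binary values scaled by 1/sqrt(number of distinct words).

```javascript
// Hypothetical helper: per-word feature values for one tokenized document.
// Returns three maps (word -> value): raw counts, binary (present = 1),
// and binary values scaled so the whole vector has Euclidean length 1.
function featureValues(tokens) {
  const counts = {};
  for (const token of tokens) {
    counts[token] = (counts[token] || 0) + 1;
  }
  const words = Object.keys(counts);
  // A binary vector with n ones has length sqrt(n), so divide each entry by sqrt(n).
  const scale = 1 / Math.sqrt(words.length);
  const binary = {};
  const normalized = {};
  for (const word of words) {
    binary[word] = 1;
    normalized[word] = scale;
  }
  return { counts, binary, normalized };
}

// Usage: "machine" appears twice, "learning" once.
const f = featureValues(["machine", "learning", "machine"]);
console.log(f.counts);     // { machine: 2, learning: 1 }
console.log(f.normalized); // each value is 1/sqrt(2), about 0.7071
```

Note that the binary and normalized versions ignore how often a word repeats; only the set of distinct words matters.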

Q: What is the format for LIBLINEAR training data?

A: Each line represents a document. The first number is the class of the document, e.g., "0" or "1". Then a space. Then a list of feature-value-pairs separated by spaces. Each feature-value-pair looks like this "32:3.141" where "32" is the index of the feature, and "3.141" is the value.

Q: It is giving me an error for this input: "0 0:1 5:1 3:1"

A: The features are 1-indexed, so you can't have a feature "0".

Q: It is still giving me an error: "0 1:1 5:1 3:1"

A: The features must be in ascending order of index, e.g., "0 1:1 3:1 5:1".
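Putting those two rules together, here is a JavaScript sketch of turning tokenized documents into LIBLINEAR lines with binary features. The helpers `buildVocabulary` and `toLiblinearLine` are hypothetical names for illustration, not part of LIBLINEAR. Indices start at 1, and they are sorted numerically before writing.

```javascript
// Assign each distinct word a 1-based feature index, in first-seen order.
function buildVocabulary(documents) {
  const vocab = new Map();
  for (const tokens of documents) {
    for (const token of tokens) {
      // vocab.size + 1 keeps indices 1-based, so there is never a feature 0.
      if (!vocab.has(token)) vocab.set(token, vocab.size + 1);
    }
  }
  return vocab;
}

// Format one document as "label index:value index:value ..." with binary values.
function toLiblinearLine(label, tokens, vocab) {
  const indices = [...new Set(tokens)]
    .filter((token) => vocab.has(token))
    .map((token) => vocab.get(token))
    .sort((a, b) => a - b); // numeric sort; the default sort compares as strings
  const pairs = indices.map((index) => `${index}:1`);
  return [String(label), ...pairs].join(" ");
}

const docs = [["machine", "learning"], ["learning", "is", "fun", "machine"]];
const vocab = buildVocabulary(docs);
console.log(toLiblinearLine(0, docs[0], vocab)); // 0 1:1 2:1
console.log(toLiblinearLine(1, docs[1], vocab)); // 1 1:1 2:1 3:1 4:1
```

The explicit comparator matters once there are ten or more features: without it, "10" would sort before "2".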

Ok, great.. I write some lispy JavaScript to convert all 10,000 of my documents into word vectors, encountering an odd behavior in _.map, writing a post about that, and ultimately running:

./train -v 5 training_data.txt

yielding "Cross Validation Accuracy = 92.97%", which sounds great.

Now if I follow the advice from "A Practical Guide to LIBLINEAR" and normalize features for each document (that is, if we think of each document as a vector in a high-dimensional space, we scale the vector to have length one) and re-run:

./train -v 5 training_data.txt

we get "Cross Validation Accuracy = 94.82%", which suggests that the LIBLINEAR people were right. Go them.

Ok, now I think that it is using an SVM. But a friend and co-worker ran a Logistic Regression on this data and got really good accuracy, and I'd rather use that, since I think I can extract from the Logistic Regression a meaningful coefficient for each feature that tells me how "useful" that feature is. The Logistic Regression (the L1-regularized variant, in LIBLINEAR's list of solvers) is accessed with the "-s 6" option:

./train -s 6 -v 5 training_data.txt

Cross Validation Accuracy = 94.76%. Not bad.

Now to see which features are the most useful. (Man, I have a raspberry seed stuck in my tooth. That always happens. Stupid raspberries. Yet I still eat them. Hm..)

Ok, so there are about 40,000 features, and only about 250 have non-zero coefficients. That's nice, and it is what L1 regularization tends to do: it pushes most coefficients to exactly zero. Should make it efficient to classify stuff.

I would say which features are the most useful, but I'm not sure I should put that online. I won't for now.

My friend plotted an ROC curve, and I wanted to understand that, so I went off to understand ROC curves...

Hm.. I notice that ROC curves do not include "precision". But I can see why that might be good. It could be that our classifier is identifying 100% of the problem items, while also falsely accusing the same number of other items. Hence, it would have 100% recall, but only 50% precision, since only half of the items it says are bad are actually bad.

Now 50% looks like low precision, but if there are 10,000 items, and only 100 of them are bad, and the system identifies 200 items as potentially bad, where all 100 bad items are in that set.. that's actually doing something pretty good, and the metric on the ROC curve shows that (the FPR would be 100/9900 ≈ 0.01, where a low number is desirable for FPR).
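The arithmetic in that example, as a JavaScript sketch. The helper `metrics` is hypothetical; the counts come from the paragraph above, with the remaining 9,800 good items correctly left unflagged.

```javascript
// Precision, recall (TPR), and false-positive rate from confusion counts.
function metrics({ tp, fp, fn, tn }) {
  return {
    precision: tp / (tp + fp), // of the items flagged bad, how many are bad
    recall: tp / (tp + fn),    // of the bad items, how many were flagged (TPR)
    fpr: fp / (fp + tn),       // of the good items, how many were wrongly flagged
  };
}

// 10,000 items, 100 bad, 200 flagged, all 100 bad ones among the flagged.
const m = metrics({ tp: 100, fp: 100, fn: 0, tn: 9800 });
console.log(m.precision);      // 0.5
console.log(m.recall);         // 1
console.log(m.fpr.toFixed(4)); // 0.0101
```

Precision divides by the number of flagged items, while FPR divides by the much larger number of good items, which is why the same 100 false alarms look bad in one metric and small in the other.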

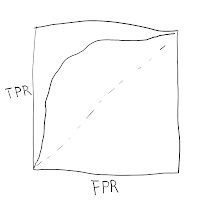

roc

I'm trying to understand ROC curves. They look kinda like this:

The TPR means True-Positive Rate. The FPR means False-Positive Rate. I've said before that TP and FP confuse me. So let's start by trying to understand that. Here's a diagram to help me remember:

Ok, Wikipedia says TPR = "the fraction of true positives out of the positives". Seems like precision. But later it says "TPR is also known as sensitivity", where Wikipedia says sensitivity is the "probability of a positive test, given that the patient is ill." But that seems like recall. Alas. Which is it?

Poking around, I think that TPR is sensitivity, and FPR is related to specificity, i.e., it is 1 - specificity. What awful names. Why do people want names for related concepts that sound so much like each other?

Just to make sure I understand sensitivity and specificity: sensitivity is how accurate the test is on red dots, and specificity is how accurate the test is on grey dots.

So

TPR = sensitivity = recall.

FPR = 1 - specificity = 1 - "accuracy on grey dots" = "inaccuracy on grey dots"

Let's try to get some parallel construction:

TPR = probability of saying a red dot is red

FPR = probability of saying a grey dot is red

So both measures are probabilities of saying dots are red.

Fine. Now the ROC curve goes from the lower-left corner (0, 0), to the upper-right corner (1, 1). What does the lower-left corner mean? Low TPR and low FPR.. that means we're unlikely to say anything is red. That seems easy to achieve. Just never say anything is red. So that must be what happens when the threshold of our test is too high, such that nothing ever meets it.

The upper-right corner means high TPR and high FPR.. that means we're very likely to say things are red. That also seems easy to achieve. Just always say everything is red. So that must be what happens when the threshold of our test is too low, such that everything meets it.

So I suppose if we try different threshold values from low to high, we'll sort of draw a line from the upper-right corner to the lower-left corner. Perhaps it is easier to think of trying different threshold values from high to low, so that we draw a curve starting at the lower-left corner and going up and to the right.
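Here is a JavaScript sketch of that high-to-low sweep. It assumes the classifier gives each item a numeric score and calls an item red when its score is at least the threshold; `rocPoints` and the sample data are hypothetical. A threshold above every score gives (0, 0), and the lowest score gives (1, 1).

```javascript
// scores[i] is the classifier's score for item i; labels[i] is true for a red item.
// Returns one { fpr, tpr } point per threshold, from the strictest to the loosest.
function rocPoints(scores, labels) {
  const positives = labels.filter((isRed) => isRed).length;
  const negatives = labels.length - positives;
  // Start above every score (nothing is red), then try each distinct score, high to low.
  const thresholds = [Infinity, ...[...new Set(scores)].sort((a, b) => b - a)];
  return thresholds.map((threshold) => {
    let tp = 0;
    let fp = 0;
    scores.forEach((score, i) => {
      if (score >= threshold) {
        if (labels[i]) tp++; // red dot called red
        else fp++;           // grey dot called red
      }
    });
    return { fpr: fp / negatives, tpr: tp / positives };
  });
}

const points = rocPoints([0.9, 0.8, 0.6, 0.3], [true, false, true, false]);
console.log(points.map((p) => `(${p.fpr}, ${p.tpr})`).join(" "));
// (0, 0) (0, 0.5) (0.5, 0.5) (0.5, 1) (1, 1)
```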

false positive 2

I said before that I have some confusion about True Positives and False Negatives and such. I think I finally identified my confusion.

This is what I often think is going on:

"True" answers the question: is this dot red?

"Positive" answers the question: did the test say the dot was red?

But I'm wrong.

Here is what "True" actually refers to:

That is, "True" answers the question: did the test say the correct thing about the dot?

I think a less confusing word would be "Correct" or "Accurate", as in Correct-Positive: a positive result that is correct.
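A tiny JavaScript sketch of that reading, keeping the two questions separate (the function name `outcome` is made up for illustration): the second word records what the test said, and the first records whether it was right.

```javascript
// "Positive"/"Negative" = what the test said; "True"/"False" = whether that was correct.
function outcome(dotIsRed, testSaidRed) {
  const correctness = dotIsRed === testSaidRed ? "True" : "False";
  const verdict = testSaidRed ? "Positive" : "Negative";
  return `${correctness} ${verdict}`;
}

console.log(outcome(true, true));   // True Positive
console.log(outcome(false, true));  // False Positive (a grey dot called red)
console.log(outcome(true, false));  // False Negative (a red dot called grey)
console.log(outcome(false, false)); // True Negative
```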

1/23/13

_.map

Underscore's _.map function can take an object or an array as input, but it always returns an array.

So

_.map({a : 1, b : 4, c : 9}, Math.sqrt)

returns

[1, 2, 3]

instead of

{a : 1, b : 2, c : 3}

alas.. why underscore? WHY?
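A key-preserving version is easy to write by hand. This is a minimal sketch with a hypothetical helper name, `mapValues`, not an Underscore function: it walks `Object.keys` and writes each result under the same key. (Later Underscore releases added `_.mapObject` for this case.)

```javascript
// Hypothetical helper: like _.map on an object, but returns an object with the same keys.
function mapValues(obj, fn) {
  const result = {};
  for (const key of Object.keys(obj)) {
    result[key] = fn(obj[key], key);
  }
  return result;
}

console.log(mapValues({ a: 1, b: 4, c: 9 }, Math.sqrt)); // { a: 1, b: 2, c: 3 }
```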

update: ugg.. it is worse than I thought. Underscore treats objects with a "length" as arrays.

So

var tokenCounts = {"hello" : 5, "world" : 3, "length" : 1, "0" : 4}

var sum = 0

_.each(tokenCounts, function (count) { sum += count })

alert(sum)

shows

4

because it sees a "length" of 1, and therefore uses a for-loop with i going from 0 to length - 1.

as for WHY, it is because some DOM and jQuery functions return things that look like arrays, but are not real arrays, and Underscore wants _.each to work on them "as expected".

I'm not sure what should be done here. I feel like the right answer is: do a better job detecting array-like-objects. Checking for "length" isn't enough. There must be some other indicator...
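One way to sidestep the array-like guess for plain objects is to iterate over their own keys explicitly. A minimal sketch (the helper `sumValues` is hypothetical), using the same `tokenCounts` object: "length" is just another key here, so all four counts get added.

```javascript
// Iterate over the object's own keys, so a "length" key is treated as data
// rather than as a signal to loop over indices 0..length-1.
function sumValues(obj) {
  let sum = 0;
  for (const key of Object.keys(obj)) {
    sum += obj[key];
  }
  return sum;
}

const tokenCounts = { hello: 5, world: 3, length: 1, 0: 4 };
console.log(sumValues(tokenCounts)); // 13
```

This only helps when the caller knows the input is a plain object; for real array-like values (DOM lists and the like), the detection question remains.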

1/22/13

no master

Bwahaha! Thanks to this post, I can host JavaScript projects on github a little more cleanly, by removing the master!

Here is my first attempt using this style, which can be viewed here.

Ahem, let me start again to be more clear:

Problem: I put a JavaScript thing on github like this, but to view it, I need to wrap it like this — note the http://htmlpreview.github.com/? at the beginning of the url. I could put the code in a gh-pages branch, but then I feel like people will be confused when they go to the repository on github and see nothing in it, because there is nothing in the master branch, which is what they see by default.

Solution: First, it turns out we can make the gh-pages branch the default. This setting is in the Settings tab, right beneath the repository name changer. Second, it turns out we can remove the master branch entirely from github, so that nobody accidentally checks out that branch, by doing this: git push origin :master. I then delete my local repo and re-clone it.

Update: It is possible to create a gh-pages branch, set it to the default, and delete the master branch, all from the github website :)

sleep

Following up on this post, here is a link to my sleep patterns.

It is currently a google spreadsheet. Here is my improvement plan:

- Create visualizer hosted on github.

- Have visualizer load data from google spreadsheet with something like this.

- Create android app that lets me say when I'm sleeping or waking up, that posts to google spreadsheet.

I want to write HumanScripts to do these things. My HumanScripting has been dormant for a while as I've been trying to transition to a different way of hiring people.

I had hired a small pool of JavaScript programmers, and would post jobs to a mailing list, but I got stuck when I wanted to post scripts that required other knowledge (e.g. android development). Also, my JavaScript programmers seem to have stopped checking my mailing list, perhaps because I don't post jobs often enough.

So my new plan is to post each HumanScript as a new job on oDesk, but using the API to make it easier. I've successfully posted a job, retrieved the list of offers, hired someone, sent them a message saying "begin!", and paid them, but I'm currently stuck ending the contract. I can end the contract without feedback, but I don't know how to end the contract with feedback.

1/21/13

worry as a guide

When I have lots of things on my todo list, such that I can't do them all, I think about how to prioritize them. But it occurs to me that what I feel as "worry" may actually be my brain's native prioritization mechanism.

I worry that I worry about unimportant things, but I feel like when I convince myself that something really is unimportant, I also stop worrying about it.

I just hate the feeling of worry. Yet I hate the feeling of being burned too, and maybe worry is sort of like feeling hot, as a warning that I might get burned.

I suppose if I'm feeling worried a lot, I'm playing too close to the fire.

handwaves

A friend mentioned that blinking and forehead movements are a big issue in EEG setups, and much care needs to be taken to remove such signals in order to see "true" brain activity. The fact that I see some sort of signal coming from the NeuroSky MindWave Mobile when it is hooked up to my hand is unsettling to me, and causes me to be skeptical of what I see when this device is hooked up to my head.

webkit bug

This seems like a bug in Webkit — Chrome 24.0.1312.52 or Safari 6.0.2 (7536.26.17).

Here's the code to reproduce.

i am twelfth

Small world: the person behind me in line today at Walgreens was a friend from college who I hadn't seen in several years.

Their e-mail address is something like iamfourth@gmail.com, but to protect their identity, it may not be that. But I liked the idea of "i am n'th" as a username, and I wondered what the lowest n was for which such a username was still available on google.

The answer, it turns out, is twelfth. Lower than I thought. Assuming I spelled that correctly.

1/20/13

math vs programming

As nice as math looks, I think the math and scientific communities should agree on some standard conversion of math into Latin characters. It would also be nice to make an effort to convert all of mankind's proofs into a standard machine-processable form that is essentially a computer program.

That way, when people look at some mathy thing, they're not stuck wondering "what does that crazy looking symbol mean?" with no way of looking it up.

Also, if someone is not sure what a formula means, or isn't convinced that it is true, they can inspect the proof-program to understand it better.

I feel like this would make math less opaque, and more accessible to a wider audience.

Q: Don't you just mean that it would be more accessible to an audience of people like you, who know how to program?

A: Well, sort of. But with an open source program, I feel like an ambitious person can, in principle, figure out what is going on, as long as they understand the programming language in use (where the programming language itself tends to be relatively small, and well documented). All the information they need is there. But with a math paper that someone writes and publishes, it may be practically impossible for someone to understand without happening to have taken some class or read some book that explains some of the crazy symbols and shortcut notations.

zeno's paradox revisited

I like to solve paradoxes while trying to sleep. Sometimes when I don't have a paradox to work on, I go back over old paradoxes to make sure I still know how to solve them.

Recently I revisited Zeno's paradox. I usually think of the paradox as trying to catch up with some moving thing, which first requires getting to where it is now, which takes some time, and then getting to where it went during that time, which takes more time, and then getting to where it went during that time, and so on.

I'm usually satisfied with the argument that although there are an infinite number of imaginary way-points to get to, they are reached more and more rapidly, such that the total time it takes to catch up with the moving thing is finite.
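Under the assumption of constant speeds, that argument is a geometric series, which a small JavaScript sketch can show (the function `catchUpTimes` and the numbers are hypothetical). A chaser at speed v starts a distance d behind a target moving at speed u < v. Each step takes the current gap divided by v, and meanwhile the target opens a smaller gap, so the step times are (d/v)(u/v)^k and add up to d/(v - u).

```javascript
// Times for the first `steps` way-point steps, assuming constant speeds v > u.
function catchUpTimes(d, v, u, steps) {
  const times = [];
  let gap = d;
  for (let k = 0; k < steps; k++) {
    const t = gap / v; // time to reach where the target was
    times.push(t);
    gap = u * t;       // how far the target moved meanwhile
  }
  return times;
}

const d = 1, v = 2, u = 1;
const total = catchUpTimes(d, v, u, 50).reduce((a, b) => a + b, 0);
console.log(total);       // just under 1
console.log(d / (v - u)); // 1, the closed-form catch-up time
```

The number of steps grows without bound while the total time stays under d/(v - u), which is the gap the foot-by-foot version of the question pokes at.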

However, I got tripped up on the following idea: what if we actually step to the first way-point with our right foot, and then step to the next way-point with our left foot, and the next with our right, and so on.

In this case, will we ever catch up with the moving thing, and if so, what foot will we land on when we do?

One could argue that at some point it will become physically impossible to take a step of the required length at the required speed, but in principle, it seems physically possible to carry out this experiment. So in principle, what would happen?

My current leaning is that if space-time were not quantized, this would actually be an issue.

on the fence

I sit on the fence. I feel like our culture looks down on fence sitters. "Take a stand!" "Don't be wishy-washy!" "Pick something, and go with it!"

I feel like both sides of every issue have merit. I feel like important issues are generally super complicated, and nobody really understands them.

My philosophical center is a lack of certainty about everything.

Q: Are you sure that is a good way to be?

A: No.

1/17/13

arrogance

A friend quoted me on Facebook as saying "At some point I decided I am 'smart' at math". I read that and felt a twinge of "man, that sounds arrogant".

However, I did say that. And I am arrogant about math. But in context, I was trying to argue that arrogance is a useful tool for understanding things.

Here's a caricature of what I mean:

♔ : I think I'm bad at math. Oh, here's an explanation of a math thing. I don't understand it. I guess I am bad at math. Oh, here's someone talking about a math thing. I didn't understand what they said. I guess I'll nod my head so they don't realize I'm bad at math.

♛ : I think I'm good at math. Oh, here's an explanation of a math thing. I don't understand it. What a crappy explanation. I'll try reading something else.. [time passes].. [much more time passes].. man, I finally understand that. I guess I am good at math! Oh, here's someone talking about a math thing. I didn't understand what they said. I'll tell them so, and ask them to explain it.

1/15/13

bad

"If I say something, and someone says it is bad, then I stop talking about that line of thought." What do I mean by bad?

While in grad school, a friend said they thought a presentation I made was bad. Really bad. And this person does not mince words. And I put a lot of effort and pride into my presentations. Yet I did not stop showing them my presentations, and was grateful that they said it was bad.

Here are some forms of "bad":

- This is bad, please don't show me similar things in the future.

- This is bad, and causes me to respect you less.

- This is bad, and suggests you should stop trying.

- This is objectively bad, and the fact that you don't see it means you should listen to me.

- I happen to dislike this particular thing, perhaps a lot, and I may not even be able to explain why, but I'm glad you showed me, and I want to see future things you do, even if they are similar to this.

If I sense one of the first four, I shut down. If I sense the last one, I find the criticism useful.

complete

Gödel suggests that a sufficiently powerful formal system can be either consistent or complete, but not both. We choose consistency. But why not completeness? Completeness has some cons, like everything can be proven true, and also proven false. But on the plus side, we can describe everything.
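The "everything can be proven true, and proven false" con is the principle of explosion. A system that proves some statement P and also ¬P can prove any statement at all, which is a cheap way of being complete. Below is a tiny Lean 4 illustration that uses the core `absurd` lemma. P and Q are arbitrary propositions.

```lean
-- Principle of explosion: from P and ¬P, any proposition Q follows.
example (P Q : Prop) (hp : P) (hnp : ¬P) : Q :=
  absurd hp hnp

-- The same works for ¬Q, so both Q and its negation become provable.
example (P Q : Prop) (hp : P) (hnp : ¬P) : ¬Q :=
  absurd hp hnp
```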

sleeping

I wrote recently about sleeping troubles, which I was trying to fix with melatonin. Something wasn't working about that. A couple times, I would take the melatonin, successfully fall asleep, but then wake up three hours later unable to sleep. I feel like the melatonin wasn't actually adjusting my biological clock.

A friend suggested a new strategy: follow my "natural" sleep schedule. My objection to this was meetings. They suggested meetings might not be as important as following my natural sleep schedule.

So I'm going to try this. I plan to set up a place for people to see when I go to bed and when I wake up, so they can gauge when to meet.

I'm actually quite excited about this. I feel like this issue has been the bane of my existence. I hate trying to force myself to sleep before I'm tired, and I generally fail when I try. And I truly hate, with a passion difficult to exaggerate, waking up before I'm awake. It also makes me tired and inefficient.

Anyway, I always get excited about trying new life strategies, but usually they're a bit theoretical, and this one feels a bit like deciding to remove a large thorn from my side.

forehead input

I'm repeating an idea a friend's friend apparently implemented long ago. But I think the idea is still awesome now, and it makes me want to hack together something electrical.

The idea is a "screen" you put on your forehead that essentially uses your skin as a retina, and each "pixel" pokes the forehead there with a mild electric shock.

And apparently this worked.

It could "show" the time, and weather, and whether someone is behind you. Maybe friends could send messages to your forehead.

And I'm bald, so I could have an even larger "display".
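I don't know how the original device was built. What follows is only a hypothetical sketch of the "skin as a retina" mapping: each pixel of a small grayscale frame corresponds to one electrode, and bright pixels trigger a pulse. The function names, the 0-255 brightness scale, and the threshold of 128 are all my assumptions.

```javascript
// Hypothetical sketch of the forehead "display": each pixel of a small
// grayscale frame (rows of 0-255 brightness values) maps to one electrode.
// Pixels at or above `threshold` are "on" and get a mild pulse this frame.
function frameToElectrodes(frame, threshold = 128) {
  return frame.map((row) => row.map((brightness) => brightness >= threshold));
}

// List the (row, col) positions of the electrodes to pulse, in row order,
// which is the kind of list a driver loop could step through.
function activeElectrodes(grid) {
  const active = [];
  grid.forEach((row, r) =>
    row.forEach((on, c) => {
      if (on) active.push({ row: r, col: c });
    })
  );
  return active;
}
```

To show the time or a message, you would render it into a tiny frame first and then pass that frame through these two steps.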

judging

I want to have my brain wired to someone else. I'm interested in the experience. I had also thought there might be the potential for a new conscious entity to arise that thought it was both people. But I'm less optimistic about that now. A friend pointed out the structural role of the prefrontal cortex in consciousness, and suggested that a new structure might need to be added. Though, thinking about it now as I write, perhaps the prefrontal cortex itself could be wired to someone else's prefrontal cortex?

Anyway, I was talking to a friend about this. They think the idea is unconventional — not something most people would want to do.

They were speculating about why I would want my brain wired to someone else, and given my relatively anti-social nature, hypothesized that I really am social, and since I have trouble forming bonds in conventional ways, I want to do so in this unconventional way.

I thought about this. I responded that I do value deep connections with people, and wish I could have even deeper connections.

And I do find forging deep connections difficult.

I thought about my main obstacle for getting close to people. I said that the ultimate barrier, for me, is judgement. If I say something, and someone says it is bad, then I stop talking about that line of thought. I still have the line of thought, but I feel like I can't share it with that person, because I don't want them to think badly of me.

But the brain wiring thing is a bit different. I'm interested in consciousness, and I feel like wiring my brain to someone else would be one of many experiments to try and explore the nature of consciousness.

input

I complained recently about lacking good sources of input. Today, a friend showed me some personal blog-style posts they had written.

I read every one of them all the way through. I tried to articulate what I found so good about them. My thought was that they were written by my friend as if to himself. They weren't really meant to be read. In that sense they were "real".

I could see having a dialog with someone, or a group, where everyone is really just writing to themselves, but letting other people see what they write.

1/14/13

blinkwaves

That anomaly in the middle is me blinking. The NeuroSky MindWave Mobile can detect blinks. Also, if I hold my eyes in a squint, I can get the signal to oscillate quite a bit, so I suppose it can detect squinting too.

Now, NeuroSky doesn't hide the fact that the headset can detect blinks. In fact, it sends a special "blink detected" signal when this happens. But I'm worried that this device is just detecting the configuration of my forehead. It is definitely influenced a lot by the way my forehead moves, and I can't seem to correlate it with anything else, like how tired or awake I am.

On a positive note, I am quite pleased with the code I wrote to achieve a real-time brainwave (or more probably blinkwave) visualizer using node.js, socket.io, and an HTML canvas. Node.js listens for signals from the headset and sends them via socket.io to a webpage, which draws them on the canvas.
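Here is a minimal sketch of the browser-side drawing half of such a pipeline. It is not the original code. It keeps a rolling window of recent samples and redraws them as a line on a 2D canvas context. The names `SampleWindow`, `samplesToPoints`, and `drawSamples` are hypothetical, as is the idea of a fixed min/max range. The headset-reading and socket.io parts are left out so the block runs on its own.

```javascript
// Fixed-size rolling window of the most recent samples, so the plot
// scrolls instead of growing forever.
class SampleWindow {
  constructor(capacity) {
    this.capacity = capacity;
    this.samples = [];
  }
  push(value) {
    this.samples.push(value);
    if (this.samples.length > this.capacity) {
      this.samples.shift(); // drop the oldest sample
    }
  }
}

// Map samples to canvas pixel coordinates: x spreads samples evenly across
// the width; y is flipped so larger values are drawn higher up.
function samplesToPoints(samples, width, height, min, max) {
  const span = max - min || 1; // avoid dividing by zero on a flat range
  const step = samples.length > 1 ? width / (samples.length - 1) : 0;
  return samples.map((v, i) => ({
    x: i * step,
    y: height - ((v - min) / span) * height,
  }));
}

// Clear the canvas and draw the points as one polyline.
function drawSamples(ctx, points) {
  ctx.clearRect(0, 0, ctx.canvas.width, ctx.canvas.height);
  ctx.beginPath();
  points.forEach((p, i) => (i === 0 ? ctx.moveTo(p.x, p.y) : ctx.lineTo(p.x, p.y)));
  ctx.stroke();
}
```

In the page, you could wire it up like this: `socket.on("sample", (v) => { win.push(v); drawSamples(ctx, samplesToPoints(win.samples, canvas.width, canvas.height, min, max)); })`. Here `"sample"` is a hypothetical event name that the Node.js side would `emit`.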

1/13/13

javascript tennis

Here are some examples of Photoshop tennis. A friend wanted to try learning JavaScript by playing JavaScript tennis. So we're playing here.

NOTE: Click the images below to see the code in action. Code is loaded directly from GitHub using this HTML preview tool that a friend pointed me to.

Round 1 (me): I started. This was a bit of code I wrote for a friend (the same friend who pointed me to the HTML preview tool) to give to his nephew, who was interested in learning to program. The idea was to create a sort of "hello world" with graphics moving in a canvas, which could be used as a starting point for creating a simple game. In a meta sense, that's exactly what it was here.

Round 2 (Paul): Paul felt my circles were too chaotic, so he restricted them to a grid and limited the sizes of the circles so they stay within the grid.

Round 3 (me): I felt Paul wasn't paying enough attention to the user, and made the circles more likely to appear around the mouse. Unfortunately the screenshot doesn't include the mouse cursor, but I was hovering in the upper-right corner.

Round 4 (Paul): Paul made the visualization more Gaussian. The tip of the Gaussian follows the mouse.

Round 5 (me): I fixed Paul's ceiling-hanging Gaussian to be upright like normal (chuckle), and I added buttons to adjust the color.
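Here is a minimal sketch of two of the ideas from Rounds 2 through 4. It is not the code from the actual rounds. The first idea is snapping circles to a grid, with sizes capped to their cell. The second is picking circle positions from a Gaussian centered on the mouse. The function names and the `spread` and `cellSize` parameters are hypothetical. The Box-Muller transform is just one standard way to turn `Math.random()` into Gaussian samples; I'm not claiming it's what either of us used.

```javascript
// Standard normal sample via the Box-Muller transform.
// `rand` returns a number in [0, 1); using 1 - rand() keeps the log argument in (0, 1].
function gaussian(rand = Math.random) {
  const u1 = 1 - rand();
  const u2 = rand();
  return Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
}

// Pick a circle center near the mouse: each coordinate is the mouse position
// plus Gaussian noise with standard deviation `spread` (in pixels).
function circleNearMouse(mouseX, mouseY, spread, rand = Math.random) {
  return {
    x: mouseX + spread * gaussian(rand),
    y: mouseY + spread * gaussian(rand),
  };
}

// Snap a point to the center of its grid cell (cellSize pixels wide),
// and cap the radius so the circle stays inside that cell.
function snapToGrid(point, radius, cellSize) {
  const col = Math.floor(point.x / cellSize);
  const row = Math.floor(point.y / cellSize);
  return {
    x: (col + 0.5) * cellSize,
    y: (row + 0.5) * cellSize,
    r: Math.min(radius, cellSize / 2),
  };
}
```

On each frame, `snapToGrid(circleNearMouse(mouseX, mouseY, 40), 15, 20)` would produce a grid-aligned circle that tends to land near the cursor.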
